27 June 2008 to 20 April 2008

¶ The Case for Teaching Machines to Understand English · 27 June 2008 child/tech

Applied natural-language-processing work is motivated by the proposition that it's more practical to teach a few computers to understand human languages than it is to teach a lot of people (including every new one) to speak computer languages. The potential advantage, thus, is numeric and large.

So far, though, many people have learned to speak computer languages pretty well, and no computers have learned to understand human languages. Indeed, at this point it's still faster to teach computer languages to humans even if you have to create the humans from scratch first...

1. Trinacria: "Make No Mistake"

Insanely inspired collaboration between viking-metal grind and noise-terror agit-processing, easily the most bracing thing I've heard in years.

2. Leviathan: "Receive the World"

Like being shredded in a slow-motion tornado filled with mid-explosion bombs and extrapolated nightmares.

3. Ihsahn: "Emancipation"

Progressive and necromantic at once, like court music from a Hades starting to gentrify just a little as it discovers its political strength.

4. Moonspell: "Dreamless (Lucifer and Lilith)"

Gothic metal's current standard-bearers.

5. Charon: "Deep Water"

A HIM to Moonspell's Sentenced.

6. Morgion: "Mundane"

A funeral march for glaciers.

7. Dalriada: "Tavaskzköszöntõ"

And at the end of the march, the unexpected moment when you are caught and carried off by sprint-pogoing goat-horned leprechauns and their seven-armed princess who only ever speaks backwards fast.

(All 14 in AAC; 65MB zip file)

¶ 7 Songs · 9 June 2008

By request:

"List seven songs you are into right now. No matter what the genre, whether they have words, or even if they're not any good, but they must be songs you're really enjoying now, shaping your spring. Post these instructions in your blog along with your 7 songs. Then tag 7 other people to see what they're listening to."

The tags: Bethany, Dennis, Heather, Dan, James, Alan, David.

The songs:

1. Frightened Rabbit: "Head Rolls Off"

:05 snippets of :30 clips is no way to fall in love with anything subtle. For Frightened Rabbit it took me a mix received and another one given to stop my scanning long enough to register dawning awe. This may be the best album of tragic-heroism since Del Amitri's Waking Hours, and "Head Rolls Off" is as defiantly hopeful a life anthem as anything since the Waterboys' "I Will Not Follow".

2. Alanis Morissette: "Underneath"

"We have the ultimate key to the cause right here." Alanis says awkward, earnest things that I also believe.

3. Jewel: "Two Become One"

If you don't have the courage to be kaleidoscopically foolish while you're still young enough, you won't have the chance to turn your "2"s into "Two"s when you're a little older, a little wiser, and a little less afraid of yourself.

4. Delays: "Love Made Visible"

Joy made audible.

5. Runrig: "Protect and Survive"

There can be echoes, inside blood, of decades and centuries and stone and water.

6. Okkervil River: "Plus Ones"

Not only probably the best gimmick song ever, but a gimmick song that actually ennobles other gimmick songs.

7. M83: "Graveyard Girl"

Best mid-80s synthpop song since the mid-80s. When my daughter is old enough to gripe about all the old crap I listen to from before she was alive, which thus can't possibly matter, I'll be able to tell her that at least she was around to dance with me to this.

¶ Never Mind the Semantic Web (or, 13 Reasons Not to Let a Computer Scientist Choose a Name (or a Problem)) · 3 June 2008 essay/tech

1. "Semantic". By starting the name this way, you have essentially, avoidably, uselessly doomed the whole named enterprise before it starts. Most people don't have the slightest idea what this word even means, most of the people who do have an idea think it implies pointless distinctions, and everybody left after you eliminate those two groups will still have to argue about what "semantic" means. This is a rare actual example of begging the question. Or to put it in terms you will understand: congratulations, you've introduced terminological head recursion. Any wonder the program never gets around to doing anything?

2. "The Semantic Web". The "The" and the "Web" and the capitalization combine to suggest, even before anybody compounds the error by stating it explicitly, that this thing, which nobody can coherently explain, is intended to compete with a thing we already grok and see and fetishize. But this is totally not the point. The web is good. What we're talking about are new tools for how computers work with data. Or, really, what we're talking about are actually old tools for working with data, but ones that a) weren't as valuable or critical until the web made us more aware of our data and more aware of how badly it is serving us, and b) weren't as practical to implement until pretty recently in processor-speed and memory-size history.

3. "FOAF". There have been worse acronyms, obviously, but this one is especially bad for the mildness of its badness. It sounds like some terrible dessert your friends pressured you to eat at a Renaissance Festival after you finally finished gnawing your baseball-bat-sized Turkey Sinew to death.

4. FOAF as the stock example. You could have started anywhere, and almost any other start would have been better for explaining the true linked nature of data than this. "Friend" is the second farthest thing from a clean semantic annotation in anybody's daily experience. I'm barely in control of the meaning of my own friend lists, and certainly wouldn't do anything with anybody else's without human context.

5. Tagging as the stock example. "Tagged as" is the first farthest thing from a clean semantic annotation in anybody's daily experience.

6. Blogging as the stock example. Even if your hand-typed RDFa annotations are nuggets of precious ontological purity, you can't generate enough of them by hand to matter. Your writing is for humans, not machines, and wasting brains the size of planets on chasing pingbacks is squandering electricity. We already know how to add to humanity's knowledge one fact at a time. The problem is in grasping the facts en masse, in turning data to information to knowledge to wisdom to the icecaps not melting on us.

7. Anything AI. Natural-language-processing and entity-extraction are interesting information-science problems, and somebody, somewhere, probably ought to be working on them. But those tools are going to pretty much suck for general-purpose uses for a really long time. So keep them out of our way while we try to actually improve the world in the meantime.

8. "Giant Global" Graph. The "Giant" and "Global" parts are menacing and unnecessary, and maybe ultimately just wrong. In data-modeling, the more giant and global you try to be, the harder it is to accomplish anything. What we're trying to do is make it possible to connect data at the point where humans want it to connect, not make all data connected. We're not trying to build one graph any more than the World Wide Web was trying to build one site.

9. Giant Global "Graph". This is a classic jargon failure: using an overloaded term with a normal meaning that makes sense in most of the same sentences. I don't know the right answer to this one, since "web" and "network" and "mesh" and "map" are all overloaded, too. We may have to use a new term here just so people know we're talking about something new. "Nodeset", possibly. "Graph" is particularly bad because it plays into the awful idea that "visualization" is all about turning already-elusive meaning into splendidly gradient-filled, non-question-answering splatter-plots.

10. URIs. Identifying things is a terrific idea, but "Uniform" is part of the same inane pipe-dream distraction as "Giant" and "Global", and "Resource" and the associated crap about protocols and representations munge together so many orthogonal issues that here again the discussions all end up being Zenotic debates over how many pins can be shoved halfway up which dancing angel.

11. "Metadata". There is no such thing as "metadata". Everything is relative. Everything is data. Every bit of data is meta to everything else, and thus to nothing. It doesn't matter whether the map "is" the terrain, it just matters that you know you're talking about maps when you're talking about maps. (And it usually doesn't matter if the computer knows the difference, regardless...)

12. RDF. It's insanely brilliant to be able to represent any kind of data structure in a universal lowest-common-denominator form. It's just insane to think that this particular brilliance is of pressing interest to anybody but data-modeling specialists, any more than hungry people want to hear your lecture about the atomic structure of food before they eat. RDF will be the core of the new model in the same way that SGML was the core of the web.

13. The Open-World Hypothesis. See "Global", above. Acknowledging the ultimate unknowability of knowledge is a profound philosophical and moral project, but not one for which we need computer assistance. Meanwhile, computers could be helping us make use of what we do know in all our little worlds that are already more than closed enough.
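Point 12's claim, that RDF can represent any kind of data structure in a universal lowest-common-denominator form, is easy to see in miniature: every structure reduces to subject/predicate/object triples. What follows is a toy sketch in Python, not an RDF library. The `ex:playlist` identifier, the bare predicate names, and the `_:b1`-style blank-node labels are illustrative assumptions; real RDF would use full URIs for predicates and a proper triple store.

```python
from itertools import count


def to_triples(obj, subject="_:root", _ids=None):
    """Flatten nested dicts/lists into (subject, predicate, object) triples.

    Each nested dict gets a generated blank-node id like "_:b1". A list
    becomes one triple per item under the same predicate, which drops the
    list's order (real RDF uses collections such as rdf:List for that).
    """
    if _ids is None:
        _ids = count(1)  # shared counter so blank-node ids stay unique
    triples = []
    for predicate, value in obj.items():
        values = value if isinstance(value, list) else [value]
        for v in values:
            if isinstance(v, dict):
                node = f"_:b{next(_ids)}"
                triples.append((subject, predicate, node))
                triples.extend(to_triples(v, node, _ids))
            else:
                triples.append((subject, predicate, v))
    return triples


playlist = {
    "title": "7 Songs",
    "song": [
        {"artist": "Delays", "title": "Love Made Visible"},
        {"artist": "M83", "title": "Graveyard Girl"},
    ],
}

triples = to_triples(playlist, "ex:playlist")
for t in triples:
    print(t)
```

The nesting disappears entirely: seven flat triples describe the same playlist, which is exactly the kind of brilliance that matters to the data modeler and is invisible to the listener.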

Frightened Rabbit: "The Modern Leper" (1.8M mp3)

Irresistible loss, concussive modernity and Scottish rain.

(via a birthday mix from B!)

Eventually, probably, we will figure out how to have computers make some kind of sense of human language. That will be cool and useful, and will change things.

But it's a hard problem, and in the short term I think much of the work required is mostly harder than it is valuable. The big current problems I care about in information technology involve letting computers do things computers are already good at, not beating human heads against them hoping they'll become more human out of sympathy.

So I'm already not the most receptive audience for Powerset, the latest attempt at "improving search" via natural-language processing. I don't think "search" is the problem, to begin with, and I don't think "searching" by typing sentences in English is an improvement even if it works.

And I don't think it works. But make up your own mind. I put together a very simple comparison page for running a search on Powerset and Google side-by-side. And then I ran some. Like these:

what's the closest star?

who was the King of England in 1776?

what movie were Gena Rowlands and Michael J. Fox in together?

new MacBook Pros today?

who are the members of Apple's board of directors?

what's the population of Puerto Rico?

when is Father's Day?

what was the last major earthquake in Tokyo?

bands like Enslaved

who is Nightwish's new singer?

who is Anette Olzon?

and then, because Powerset suggested it:

who is Anette Olson?

and then, because Google suggested it:

who is Annette Olson?

I think, given these results, it's very hard to argue that Powerset's NLP is doing us much good. At least, not yet. And I'm not their (or anybody's) VC, but I wouldn't be betting a team of salaries that it's going to any time soon.

[12 August 2008 note: the above queries are all still live, and some generate different results today than they did when I posted this. Powerset now gets Anette Olzon, although they still also suggest Anette Olson despite having no interesting results for it, and it still takes Google to suggest fixing Anette Olson to Annette Olson.

The most bizarre new result, though, is that currently the Powerset query-result page for "what movie were Gena Rowlands and Michael J. Fox in together?" is itself the top hit in Google for that query.]
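The post doesn't say how its side-by-side comparison page was built. One minimal way to make such a page, sketched here in Python, is to generate an HTML file that loads both engines' result pages in adjacent frames. Google's `search?q=` URL form is real; the Powerset URL below is a placeholder, since the post doesn't give Powerset's query format. Many sites, Google included, may also refuse to load inside a frame, so treat this as a sketch of the idea rather than a working tool.

```python
from html import escape
from urllib.parse import quote_plus

# Google's results page takes the query in the "q" parameter.
GOOGLE_URL = "https://www.google.com/search?q={}"
# Placeholder: the post does not say what URL format Powerset used.
POWERSET_URL = "https://powerset.example/search?q={}"


def comparison_page(query: str) -> str:
    """Build an HTML page that loads both engines' results for one query side by side."""
    encoded = quote_plus(query)  # spaces become "+", "?" becomes "%3F", etc.
    frames = "\n".join(
        f'<iframe src="{escape(url.format(encoded))}" '
        'style="width:49%;height:90vh;border:0"></iframe>'
        for url in (POWERSET_URL, GOOGLE_URL)
    )
    return (
        '<!DOCTYPE html>\n<html><head><meta charset="utf-8">'
        f"<title>{escape(query)}</title></head>\n<body>\n{frames}\n</body></html>\n"
    )


if __name__ == "__main__":
    print(comparison_page("what's the closest star?"))
```

Saving the output of `comparison_page` for each query above as its own `.html` file would give one comparison page per question.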

¶ Three From One · 5 May 2008 child/photo

¶ Who Profits From Your Fears? · 5 May 2008

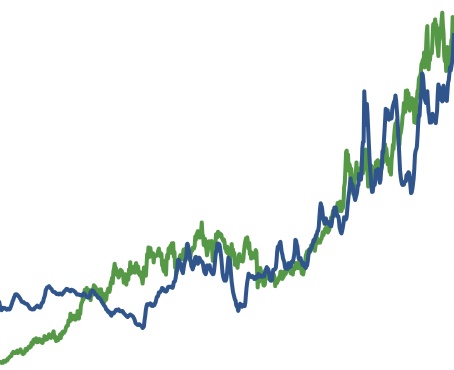

15 years of gas prices and Exxon/Mobil stock.

The core of the "semantic web" idea, at least as far as I'm concerned, is that we're trying to do for data what the first web did for pages. We're trying to make dataspaces, both individual and aggregate, that can be explored and analyzed both by people directly, and by machines on our behalf. The people half of this, at least, is not mysterious or obscure or even speculative. It looks like IMDb, or any other site where there's pretty much a page for each individual thing, and you can click your way from every thing to everything else.

The machine part is more complicated, but only by a little. Instead of regular old-web links, which just tell the computer where to go, a "semantic" link also says what it means to go there. So the old-web page for Rush Hour 3 links to Jackie Chan and Chris Tucker, but also to ads and the IMDb front page and job-listings for IMDb.com, and as far as the machines can tell, these links are all essentially equal. When IMDb gets their act from web 2.0 to 3.0, the links will be annotated so that the ones that go to Jackie and Chris and the other cast members are labeled "actor", and the other links aren't, and then you can ask a question less like "What web pages mention the words 'Jackie' and 'Chan' and 'older'?" and more like "How many people in that movie were older than him, anyway?", and the machines might have enough material to figure it out for you.
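The difference between an old-web link and an annotated one can be sketched in a few lines of Python. The data model here is an assumption for illustration, not IMDb's or any standard's: each link carries an optional label, the unlabeled links stand in for the front-page and job-listing links, and the cast member `ActorX` (with birth year 1929) is hypothetical. Jackie Chan's and Chris Tucker's birth years (1954 and 1971) are real.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Link:
    source: str
    target: str
    label: Optional[str] = None  # None = old-web link: says where, not what it means


links = [
    Link("RushHour3", "JackieChan", "actor"),
    Link("RushHour3", "ChrisTucker", "actor"),
    Link("RushHour3", "ActorX", "actor"),   # hypothetical cast member
    Link("RushHour3", "IMDbFrontPage"),     # unlabeled navigation link
    Link("RushHour3", "IMDbJobListings"),   # unlabeled navigation link
]

# Only people have birth years; ActorX's is invented for the example.
birth_year = {"JackieChan": 1954, "ChrisTucker": 1971, "ActorX": 1929}


def cast(movie):
    """Follow only the links labeled "actor" out of a movie's page."""
    return [link.target for link in links
            if link.source == movie and link.label == "actor"]


def older_than(movie, person):
    """Cast members of `movie` born before `person` (excludes `person` itself)."""
    return [p for p in cast(movie) if birth_year[p] < birth_year[person]]


print(len(older_than("RushHour3", "JackieChan")))
```

With unlabeled links only, the same question would have to treat `IMDbFrontPage` as a possible cast member, and the birth-year lookup would fail on it; the "actor" label is what lets the machine skip it.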

And that, and not coruscating pie-charts, is how you'll start to recognize the pieces of the new web as it begins to emerge: its sites will help you get real answers to real questions without you having to get out scratch-paper and click a hundred links yourself. The more time you spend thinking about this idea, I believe, the more revolutionary you'll realize it is. In terms of how computers augment human capacities for understanding information, the jump from the regular web to the semantic web will be a bigger deal than the jump from magazines and books and newspapers to the web. Maybe bigger by a lot.

Which is why I was excited to finally get an invitation to the private beta program for Twine, despite basically not knowing what it was. My wildly hopeful guess, from the pre-release hints about "personal information", had been that Twine might be the long-awaited reincarnation of the soul of Lotus Agenda, a personal information management program in a world where a lot more people now have enough information piling up around them for "managing" it to be a generalizable problem.

Twine, it turns out, at least so far, is a social bookmarking application. Bookmarking is not exactly what I meant by information management, any more than daytimer+contacts is what I meant by it in 1992. I gather that there is semantic-web technology behind Twine, somewhere, and I think this is supposed to make the "other tags" Twine recommends for your bookmarks better than the other tags del.icio.us recommends, or the other feeds Google Reader recommends, or the microwave that Amazon tells you was purchased by other people who pre-ordered a Douglas Coupland novel. Or it's supposed to eventually make this true, anyway, some day when/if there are more bookmarks and more people in Twine, which is after all still "in beta", which means that you're supposed to imagine that it will eventually get smart about everything it's currently dumb about.

And in Twine's case, this might eventually make it a really good social bookmarking application. If so, I will happily switch from del.icio.us to Twine for my minimal and basically expendable social-bookmarking needs.

But as an ambassador for the Semantic Web, Twine is an embarrassment. Or, maybe more accurately, it's embarrassed. It buries its semantic-web-ness inside, like it's the information-technology version of oat bran, and the reaction they're going for is "Oh, these donuts taste so good you'd barely know they had any Semantic Web in them!" But oat bran doesn't keep donuts from being junk food, and RDF-storage and named-entity-extraction don't make social-bookmarking any less page-oriented.

And I probably wouldn't care if Nova hadn't set up so much semantic-web context around himself and his company and their product. But we've collectively screwed up the presentation of this simple idea about how the next web will be better, somehow, and a lot of people have become convinced that the semantic web is some kind of clanking information C-3PO from an idiot-fantasy future, complaining about etiquette and waddling like it has a Commodore 64 wedged up its ass. So for a little while, at least, anybody working on the tools for building the new web is automatically an apologist learning how to be an evangelist instead. So I want everything that says Semantic Web on it to point clearly to the way the future is really and simply better. I don't want it to look like NLP alchemy, or like temperamental magic someone is trying to use in place of levers or pulleys or Perl. And I especially don't want it to look like some old thing that most people already didn't need.

But then, this is the standard I will be held to, too, if we manage to build and ship the semantic-web application I'm working on. I want to be part of the way the world gets better, and to do something that is not embarrassed of the future it is helping to build. We'll see.
