20 July 2005 to 30 June 2005

¶ 20 July 2005

After playing the Waltham album and the bonus EP a couple times each, I'm even more thrilled. This is basically the 2005-production band version of 80s solo Rick Springfield. If that doesn't seem like a good idea to you, it probably won't sound like one either.

Waltham: "Cheryl (Come and Take a Ride)" (1.9M mp3)

I haven't even listened to the rest of the album yet; this is just track 1.

¶ Bored? · 11 July 2005

Come talk about movies...

And lastly, if for no other reason than that the time-stamps on our photos are the only journal I kept this time, the stubbornly and inexplicably interested are cautiously and apologetically welcome to click as fast as possible through the otherwise potentially interminable Japan+Bali 2005 extended instrumental all-plot remix.

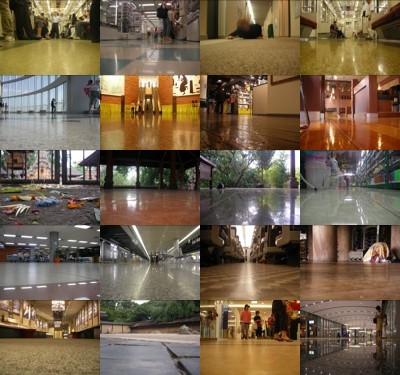

Tokyo Metro, Tsukiji to Omote-Sando

Tokyu Hands, Shibuya

Nishi Shinjuku Hotel, Shinjuku

Tokyo Metro, Asakusa to Shinjuku

Mori Center 50F, Roppongi Hills

Mori Center 52F, Roppongi Hills

Shin-Yokohama Ramen Museum, Shin-Yokohama

Pronto, Shinjuku

Pura Dalem Agung, Ubud

Segara Giri Kencana, Menjangan Island

Taman Sari, Pemuteran

Delta Dewata supermarket, Ubud

Ngurah Rai Airport, Denpasar

shinkansen platform, Shinagawa

Hikari shinkansen, Shinagawa to Kyoto

Kiyomizu-dera, Kyoto

Kyoto Museum for World Peace, Ritsumeikan University, Kyoto

Ryoanji, Kyoto

OPA department store, Kyoto

Shinagawa station, Tokyo

toro and hamachi, Daiwa Sushi, Tsukiji

breakfast hotdog, Presto, East Shinjuku

unidentified pastry, Presto, East Shinjuku

takoyaki, sidewalk cart, Ueno Park

Japan half-and-half, Bar Del Sole, Roppongi

bibimbap and bulgogi, Saikabo, Shinjuku My City

ramen, Komurasaki, Shin-Yokohama Ramen Museum

lukewarm soup, unnamed warung, Candi Kuning

banana fritter, Ngiring Ngawedang, Munduk

palm-sugar crepes, Taman Sari, Pemuteran

Pocari Sweat, boat off Menjangan Island

nasi goreng ananda, Ananda, Candi Kuning

random ekiben #1, Shinagawa

random ekiben #2, Shinagawa

ramen, near Kinkakuji, Kyoto

tea spigot, Musashi kaitenzushi, Kyoto

¶ 30 June 2005

Zapruder Point: "The O.M." (0.9M mp3)

from It's Always the Quiet Ones

I loaded the CD-changer in my car before the trip, so interspersed with all the new Japanese pop and trip-photo sorting and back-at-work denial and willful continuing other-cultural immersion, I've been hearing a few records I was pondering before I left. I'm dancing around to ultra-produced big-corporate techno-pop in a language I only sporadically understand and even less often actually empathize with, but I'm also standing still, humming small, wistful, under-produced, uncalculated reasons for being home to feel right.