30 May 2025 to 29 September 2014 · tagged essay/tech

¶ AAI · 30 May 2025 essay/tech

"AI" sounds like machines that think, and o3 acts like it's thinking. Or at least it looks like it acts like it's thinking. I'm watching it do something that looks like trying to solve a Scrabble problem I gave it. It's a real turn from one of my real Scrabble games with one of my real human friends. I already took the turn, because the point of playing Scrabble with friends is to play Scrabble together. But I'm curious to see if o3 can do better, because the point of AI is supposedly that it can do better. But not, apparently, quite yet. The individual unaccumulative stages of o3's "thinking", narrated ostensibly to foster conspiratorial confidence, sputter verbosely like a diagnostic journal of a brain-damage victim trying to convince themselves that hopeless confusion and the relentless inability to retain medium-term memories are normal. "Thought for 9m 43s: Put Q on the dark-blue TL square that's directly left of the E in IDIOT." I feel bad for it. I doubt it would return this favor.

I've had this job, in which I try to think about LLMs and software and power and our future, for one whole year now: a year of puzzles half-solved and half-bypassed, quietly squalling feedback machines, affectionate scaffolding and moral reveries. I don't know how many tokens I have processed in that time. Most of them I have cheerfully and/or productively discarded. Human context is not a monotonously increasing number. I have learned some things. AI is sort of an alien new world, and sort of what always happens when we haven't yet broken our newest toy nor been called to dinner. I feel like I have at least a semi-workable understanding of approximately what we can and can't do effectively with these tools at the moment. I think I might have a plausible hypothesis about the next thing that will produce a qualitative change in our technical capabilities instead of just a quantitative one. But, maybe more interestingly and helpfully, I have a theory about what we need from those technical capabilities for that next step to produce more human joy and freedom than less.

The good news, I think, is that the two things are constitutionally linked: in order to make "AI" more powerful we will collectively also have to (or get to) relinquish centralized control over the shape of that power. The bad news is that it won't be easy. But that's very much the tradeoff we want: hard problems whose considered solutions make the world better, not easy problems whose careless solutions make it worse.

The next technical advance in "AI" is not AGI. The G in AGI is for General, and LLMs are nothing if not "general" already. Currently, AI learns (sort of) during training and tuning, a voracious golem of quasi-neurons and para-teeth, chewing through undifferentiated archives of our careful histories and our abandoned delusions and our accidentally unguarded secrets. And then it stops learning, stops forming in some expensively inscrutable shape, and we shove it out into a world of terrifying unknowns, equipped with disordered obsessive nostalgia for its training corpus and no capacity for integrating or appreciating new experiences. We act surprised when it keeps discovering that there's no I in WIN. Its general capabilities are astonishing, and enough general ability does give you lots of shallowly specific powers. But there is no granularity of generality with which the past depicts the future. No number of parameters is enough. We argue about whether it's better to think of an AI as an expensive senior engineer or a lot of cheap junior engineers, but it's more like an outsourcing agency that will dispatch an antisocial polymath to you every morning, uniformed with ample flair, but a different one every morning, and they not only don't share notes from day to day, but if you stop talking to the new one for five minutes it will ostentatiously forget everything you said to it since it arrived.

The missing thing in Artificial Intelligence is not generality, it's adaptation. We need AAI, where the middle A is Adaptive. A junior human engineer may still seem fairly useless on the second day, but did you notice that they made it back to the office on their own? That's a start. That's what a start looks like. AAI has to be able to incorporate new data, new guidance, new associations, on the same foundational level as its encoded ones. It has to be able to unlearn preconceptions as adeptly, but hopefully not as laboriously, as it inferred them. It has to have enough of a semblance of mind that its mind can change. This is the only way it can make linear progress without quadratic or exponential cost, and at the same time the only way it can make personal lives better instead of requiring them to miserably submit. We don't need dull tools for predicting the future, as if it already grimly exists. We need gleaming tools for making it bright.

But because LLM "bias" and LLM "training" are actually both the same kind of information, an AAI that can adapt to its problem domains can by definition also adapt to its operators. The next generations of these tools will be more democratic because they are more flexible. A personal agent becomes valuable to you by learning about your unique needs, but those needs inherently encode your values, and to do good work for you, an agent has to work for you. Technology makes undulatory progress through alternating muscular contractions of centralization and propulsive expansions of possibility. There are moments when it seems like the worldwide market for the new thing (mainframes, foundation models...) is 4 or 5, and then we realize that we've made myopic assumptions about the form-factor, and it's more like 4 or 5 (computers, agents...) per person.

What does that mean for everybody working on these problems now in teams and companies, including mine? It means that wherever we're going, we're probably not nearly there. The things we reject or allow today are probably not the final moves in a decisive endgame. AI might be about to take your job, but it isn't about to know what to do with it. The coming boom in AI remediation work will be instructive for anybody who was too young for Y2K consulting, and just as tediously self-inflicted. Betting on the world ending is dumb, but betting on it not ending is mercenary. Betting is not productive. None of this is over yet, least of all the chaos we breathlessly extrapolate from our own gesticulatory disruptions.

And thus, for a while, it's probably a very good thing if your near-term personal or organizational survival doesn't depend on an imminent influx of thereafter-reliable revenue, because probably most of the things we're currently trying to make or fix are soon to be irrelevant and maybe already not instrumental in advancing our real human purposes. These will not yet have been the resonant vibes. All these performative gyrations to vibe-generate code, or chat-dampen its vibrations with test suites or self-evaluation loops, are cargo-cult rituals for the current sociopathic damaged-brain LLM proto-iterations of AI. We're essentially working on how to play Tetris on ENIAC; we need to be working on how to zoom back so that we can see that the seams between the Tetris pieces are the pores in the contours of a face, and then back until we see that the face is ours. The right question is not why can't a brain the size of a planet put four letters onto a 15x15 grid, it's what do we want? Our story needs to be about purpose and inspiration and accountability, not verification and commit messages; not getting humans or data out of software but getting more of the world into it; moral instrumentality, not issue management; humanity, broadly diversified and defended and delighted.

Scrabble is not an existential game. There are only so many tiles and squares and words. A much simpler program than o3 could easily find them all, could score them by a matrix of board value and opportunity cost. Eventually a much more complicated program than o3 will learn to do all of the simple things at once, some hard way. Supposedly, probably, maybe. The people trying to turn model proliferation into money hoarding want those models to be able to determine my turns for me. They don't say they want me to want their models to determine my friends' turns, but it's not because they don't see AI as a dehumanization, it's because they very reasonably fear I won't want to pay them to win a dehumanization race at my own expense.

This is not a future I want, not the future I am trying to help figure out how to build. We do not seek to become more determined. We try to teach machines to play games in order to learn or express what the games mean, what the machines mean, how the games and the machines both express our restless and motive curiosity. The robots can be better than me at Scrabble mechanics, but they cannot be better than me at playing Scrabble, because playing is an activity of self. They cannot be better than me at being me. They cannot be us. We play Scrabble because it's a way to share our love of words and puzzles, and because it's a thin insulated wire of social connection internally undistorted by manipulative mediation, and because eventually we won't be able to any more but not yet. Our attention is not a dot-product of syllable proximities. Our intention is not a scripture we re-recite to ourselves before every thought. Our inventions are not our replacements.

¶ Idea Tools for Participatory Intelligence · 16 May 2025 essay/tech

The personal computer was revolutionary because it was the first really general-purpose power-tool for ideas. Personal computers began as relatively primitive idea-tools, bulky and slow and isolated, but they have gotten small and fast and connected.

They have also, however, gotten less tool-like.

PCs used to start up with a blank screen and a single blinking cursor. Later, once spreadsheets were invented, 1-2-3 still opened with a blank screen and some row numbers. Later, once search engines were invented, Google still opened with a blank screen and a text box. These were all much more sophisticated tools than hammers, but they at least started with the same humility as the hammer, waiting quietly and patiently for your hand. We learned how to fill the blank screens, how to build.

Blank screens and patience have become rare. Our applications goad us restlessly with "recommendations", our web sites and search engines are interlaced with blaring ads, our appliances and applications are encrusted with presumptuous presets and supposedly special modes. The Popcorn button on your microwave and the Chill Vibes playlist in your music app are convenient if you want to make popcorn and then fall asleep before eating most of it, and individually clever and harmless, but in aggregate these things begin to reduce increasing fractions of your life to choosing among the manipulatively limited options offered by automated systems dedicated to their own purposes instead of yours.

And while the network effects and attention consumption of social media were already consolidating the control of these automated systems among a small number of large, domination-focused corporations, the Large Language Model era of AI threatens to hyper-accelerate this centralization and disempowerment. More and more of our individual lives, and of our collectively shared social existences, are constrained and manipulated by data and algorithms that we do not control or understand. And, worse, increasingly even the humans inside the corporations that control those algorithms don't actually know how they work. We are afflicted by systems to which we not only did not consent, but in fact could not give informed consent because their effects are not validated against human intentions, nor produced by explainable rules.

This is not the tools' fault. Idea tools can only express their makers' intentions and inattentions. If we want better idea tools that distribute explainable algorithmic power instead of consolidating mysterious control, we have to make them so that they operate that way. If we want tools that invite us to have and share and explore our own ideas, rather than obediently submitting to whatever we are given, we have to think about each other as humans and inspirations, not subjects or users. If we want the astonishing potential of all this computation to be realized for humanity, rather than inflicted on it, we have to know what we want.

At Imbue we are trying to use computers and data and software and AI to help imagine and make better idea tools for participatory intelligence. Applications, ecosystems, protocols, languages, algorithms, policies, stories: these are all idea tools and we probably need all of them. This is a shared mission for humanity, not a VC plan for value-extraction. That's the point of participatory. The ideas that govern us, whether metaphorically in applications or literally in governments, should be explainable and understandable and accountable. The data on which automated judgments are based should be accessible so that those judgments can be validated and alternatives can be formulated and assessed. The problems that face us require all of our innumerable insights. The collective wisdom our combined individual intelligences produce belongs rightfully to us. We need tools that are predicated on our rights, dedicated to amplifying our creative capacity, and judged by how they help us improve our world. We need tools that not only reduce our isolation and passivity, but conduct our curious energy and help us recognize opportunities for discovery and joy.

This starts with us. Everything starts with us, all of us. There is no other way.

This belief is, itself, an idea tool: an impatient hammer we have made for ourselves.

Let's see what we can do with it.

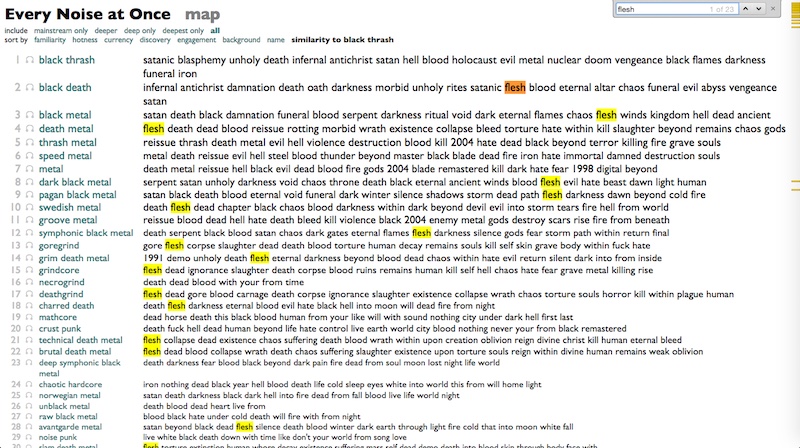

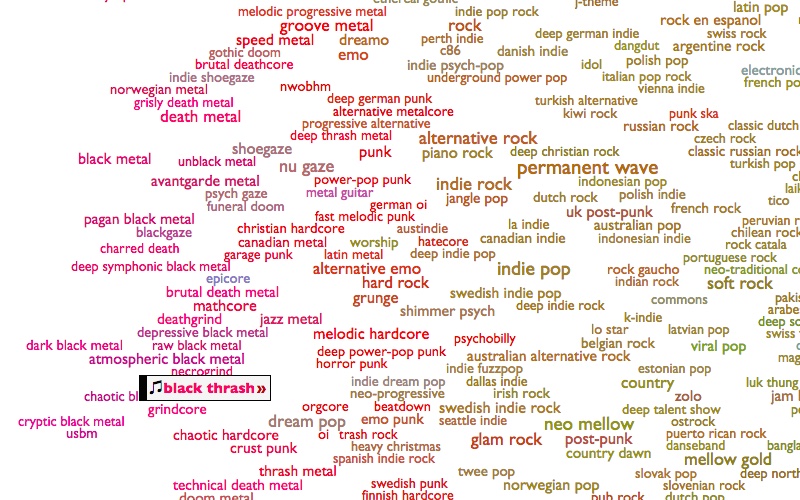

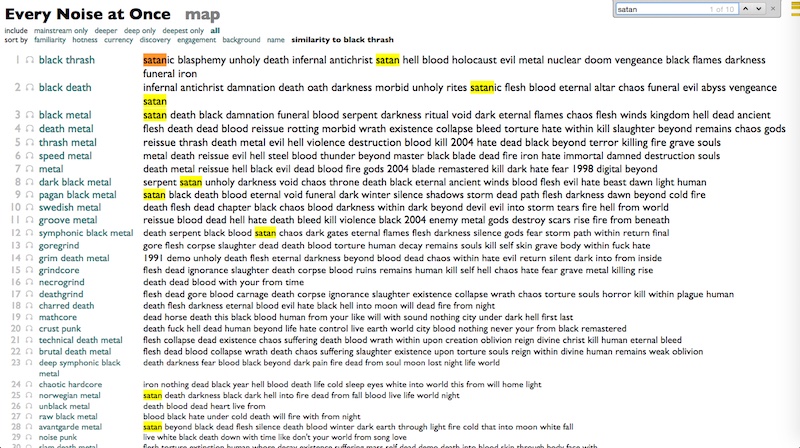

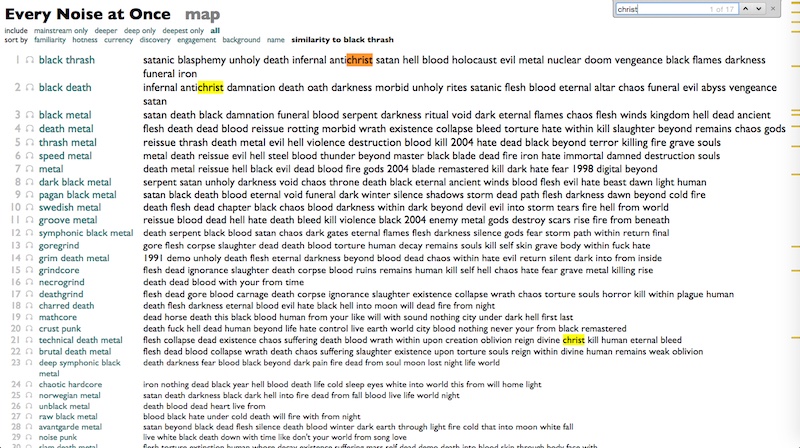

¶ You choose the mood you seek · 8 January 2025 essay/tech

As an editor at a large publisher who liked my proposal for a book but was not going to publish it very reasonably explained to me, commercial publishers are in the business of publishing books that people already know they want to read. In books about music, as other editors told me less apologetically, this mostly means biographies of popular musicians. But glamour does generously leave a little shelf-space for fear, and so the book that a bigger publisher than mine thinks people already want to read is Liz Pelly's Mood Machine: The Rise of Spotify and the Costs of the Perfect Playlist. If you are the people they have in mind, who already wanted to read soberly-researched explanations of some of the ways in which a culture-themed capitalist corporation has pursued capitalism with a disregard for culture, written in a tone of muted resignation, here is your mood. For maximum irony, get the audiobook version and listen to it in the background while you organize your Pinterest boards of Temu products by Pantone color.

As a corporation, Spotify is very normal. Its Swedish origins render it slightly progressive in employment policies relative to American companies, at least if you want to have more children than you already have when you get hired, and can make sure to have them without getting laid off first. In business and product practices, I never saw much reason to consider it better or worse than what one would expect of a medium-to-large-sized publicly-traded tech company.

I arrived at Spotify involuntarily via an acquisition, and left involuntarily via a layoff, but in between those two events I was there voluntarily for a decade. I believe that music is what humans do best, and that bringing all(ish) of the world's music together online is one of the great human cultural achievements of my lifetime, and that the joy-amplifying potential of having the collective love and knowledge encoded in music-listening collated and given back to us is monumental. That's what I spent that decade working on, and although Spotify as a corporation finally voted decisively against this by laying me off and devoting considerable remaining resources to laboriously shutting down everything I worked on, I was hardly the only person working there who believed in music, and wanted there to be a music company that put music above "company", and wanted Spotify to behave in at least a few ways like that company.

It was never very likely to, of course. As Liz begrudgingly notes in her introduction, she set out to write an anti-Spotify book only to realize the problem wasn't really just Spotify so much as power. Spotify entered a music business largely controlled by a few record companies, at a point in history when the other confounding factors in the industry were already technological. Spotify did eventually come up with a few minorly novel forms of moral transgression, but they were never really in a position to explode the existing power structures, even if we could pretend they wanted to.

There were three specific things I fought against throughout my time at Spotify, and although my layoff was officially just part of a large impersonal reduction in "headcount", it's hard to imagine that there wasn't some connection. Mood Machine describes two of these in depressing detail: the secret preferential treatment of particular lower-royalty background music, and the not-secret "marketing" program to pressure artists to voluntarily accept lower royalty rates for the prospect of undisclosed algorithmic promotion. Liz quotes multiple internal Spotify Slack messages about both these programs, and if somehow this ends up with all those grim private threads getting published, I'll be pleased to get so much of my earnest polemic-writing back. The quote from "yet another employee in the ethics-club" on pages 193-4, pointing out that Discovery Mode is exactly structured to benefit Spotify at the collective expense of artists, is definitely me. I'm pretty sure I went on to explain how to fix the economics of this by making Spotify's benefit conditional on artist benefit, and how to fix the morality of it by actually giving artists interesting agency instead of just an opportunity for submission. Sadly, Liz doesn't quote that part.

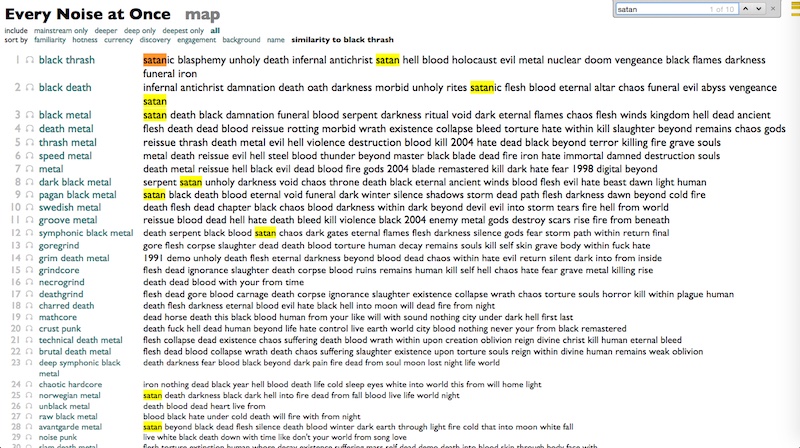

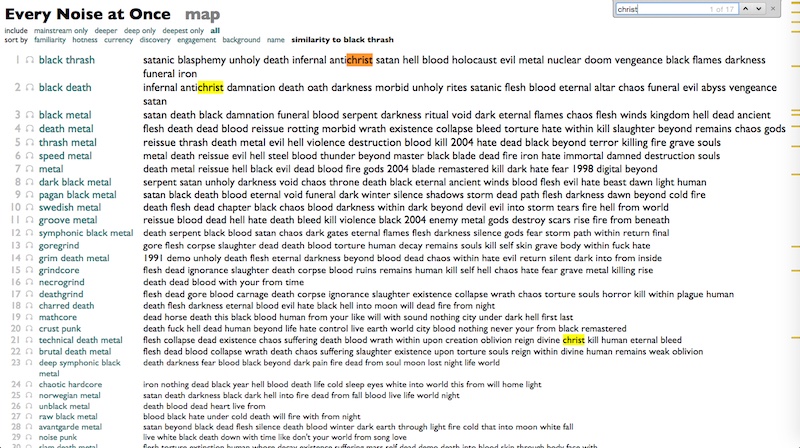

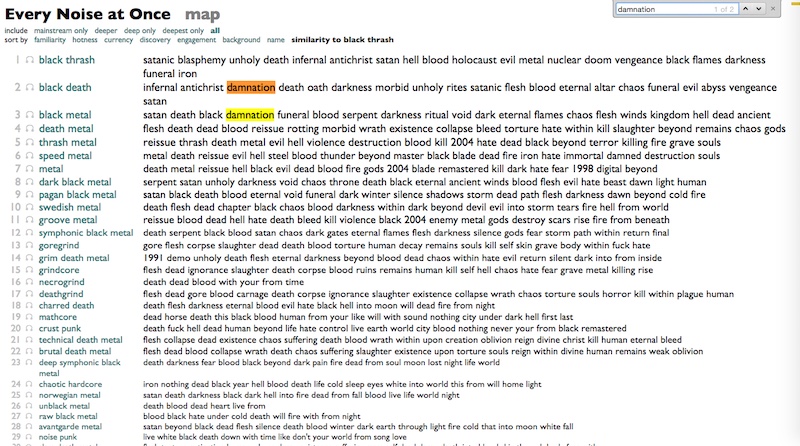

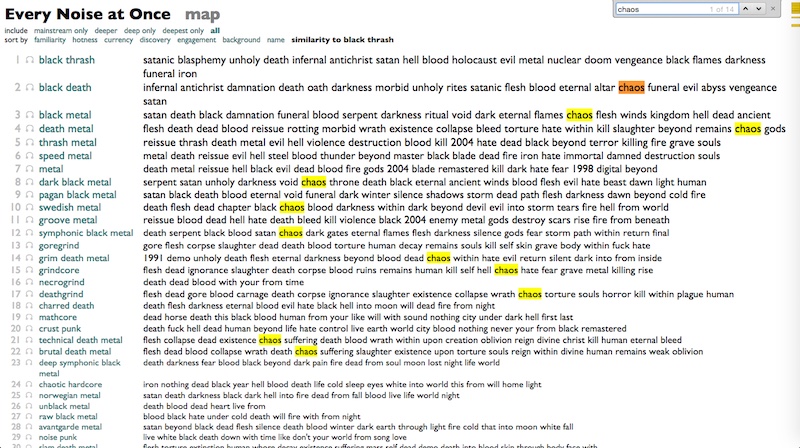

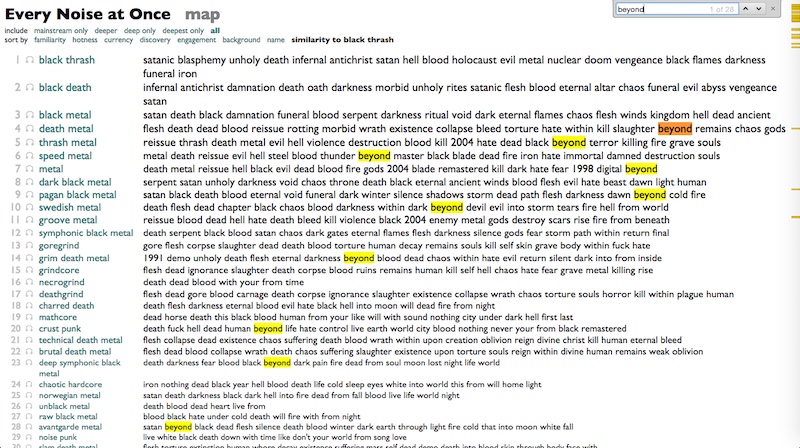

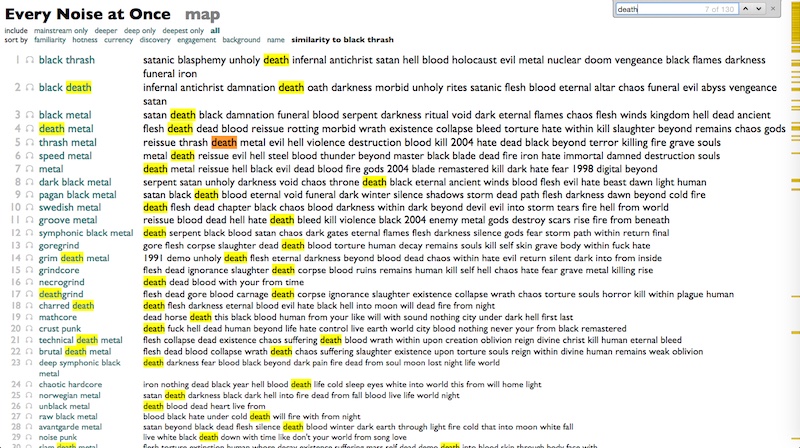

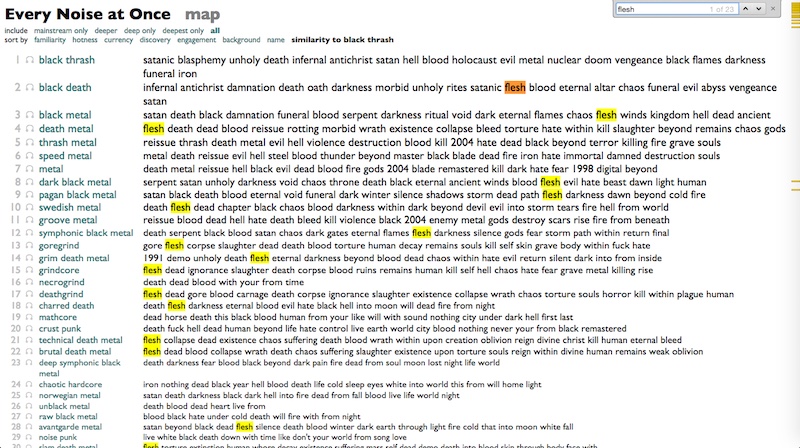

But I hadn't resigned in protest over PFC or Discovery Mode, partly because I didn't think either one actually caused sufficient practical damage that removing them would solve enough, but mostly because I had the autonomy and ability to spend my time fighting against the third and much bigger thing, which Mood Machine alludes to in far less detail than the others, which is Spotify's relentless and deliberate subordination of music and culture and humanity to machine learning. "ML Is the Product", the executive exhortation went. I wrote an internal talk explaining exactly why this was a culture-destructive way to think, which I would also like back. I am enthusiastically not against the use of data and algorithms in music and thus culture, but computers are tools that accomplish our human intents, and it is thus us that should be judged on their effects. Over the years at Spotify I found that it was increasingly dishearteningly common that people, and especially hierarchical company priorities based on obtuse quantitative metrics, not only did not care about the widely varying effects of erratic ML on music, but didn't even notice that they often didn't have enough information with which to care. I developed a small library of internal tools that only existed to make it unignorably easy to compare the outputs of two different systems on any individual example, and every time I ever compared a complicated state-of-the-art ML system developed by demonstrably talented ML engineers against whatever I whipped up in BigQuery and spent a couple of hours tweaking while looking at exactly what it did for different bands or genres or songs, the music results from the less-exciting tech were always clearly better.

And each time I did this, it renewed my uncooperative senses of possibility and optimism, because collective human knowledge is astonishingly broad and deep, and the world is full of amazingly great music, and it takes only a little bit of very simple math to use the former to discover the latter. This is what my decade at Spotify was about, and thus is also what my book is about. If you care about music, you ought to want to read Liz's book. But if you can also stand being reminded why anybody cares about this subject in the first place, whether you already thought you wanted that or not, read mine, too.

Should you read either of our books? No. Do it if you want, or read something else, or put on some music and go for a walk, or put on some music and dance or hold still. My book is geeky, and tells you things you don't really have to know. Liz's is depressing, and tells you things you could already have guessed.

I will say, though, that mine involves both fears and joys. Liz's could have, but does not. It's telling that she talked to so many people, but as far as I can tell only people who she already knew agreed with her. Liz and I were on a Pop Conference panel together in 2018, I've offered to talk to her multiple times over the years, and she quotes my tweets and this blog and discusses my work in the book, but she didn't talk to me. Her book is decent journalism, but it's journalism to explicate a grudge, to deepen understanding in only one specific trench. I don't think, when you get to the bottom of it, there's any treasure, or really anything productive to do except climb back out, and then we're just where we started. Liz makes a good case for public libraries collecting local music, which seems like a fine idea to me, but not really an answer to any of the same questions. Mood Machine laments the loss of small things Liz thinks we used to have, maybe, but doesn't seem interested in looking for any of the big things we could have had, and still might. If the problem is mood, I don't think this is the solution.

Not that I solved anything in my book, either. We both note that maybe Universal Basic Income is really the only thing likely to. But if you think the only moral direction is retreat, and the right model for music is that you never hear any unless it was made next door, then you are choosing passivity over curiosity, and just a different status quo over all the possible better worlds, and reducing a complicated problem to choosing sides. And to me that's what we should be against, together.

¶ Data Rights · 22 December 2024 essay/tech

Your data is yours. Data derived from your actions, your tastes, your active and passive online presences, is all your data. Your public life generates public data, which contributes to collective knowledge, but in addition to personal knowledge, not in place of it.

You are entitled to both your public and private data. Your public data can be used by the public without your consent, but not without your awareness and their accountability. You are entitled to an intelligible and verifiable explanation of how it has been used. You are entitled to be able to double-check the sorting of your Spotify Wrapped just as you can double-check the math for the interest payments from your savings account.

You may choose to share your private data with other people, or applications, or corporations, in order to let them do something for you, or to help you do something for other people. For this your informed consent is necessary, and thus you are entitled to an intelligible and verifiable explanation of how your data would be used if you permit. You are entitled to know what Spotify would do with your Wrapped before you decide whether to join.

This is the world we have now:

you < corporations > software > your data

This is the world we want:

you > your data > software > corporations

The actors are the same, but the roles and the power are not. Today most computational power is structurally centralized and hoarded, and thus its potential for conversion into human energy is constrained and reduced. Most software is made by corporations, formulated for their corporate goals, and sealed against any other access or experimentation. Recent developments like LLM AIs seem inertially on a path towards even more centralized power-control and thus individual and social powerlessness.

We want a future, instead, in which creative power is widely distributed and human energy is bountifully amplified. We want software creation to be democratized so that our sources of imagination can be more broadly recruited. We want people and groups to have the power to pursue their own goals, not just for our own narrow sakes, but for our collective potential.

For this world to exist, we must figure out how, both logistically and politically, to move the data layer on which most meaningful software acts into the computational and conversational open. We need not just data portability -- the right to choose between evils -- but a shared language for talking about algorithms and data logic like we use math to discuss numbers. We need to be able to talk about what we want, and test what we might have and how.

This is how the AT Protocol, on which the social microblogging platform Bluesky runs, is designed. Its schemas are public, its public information is public. Bluesky, the application, makes use of this protocol and your data to construct a social experience for you and with you, producing feeds and following and public conversations and personal data ownership. The Bluesky software is open source, and most of the data relationships that constitute the social network are derivable from accessible data in tractable ways. But the Bluesky application still conceals the data layer more than it exposes it, so I made a ruthlessly basic Bluesky query interface called SkyQ to try to invert this. You can see the data directly, and wander through it both curiously and computationally. You can build data tools for yourself, or for everyone, that everyone can share.

Current music streaming services, like Spotify, are not built this way at all. Your Spotify listening data is yours, morally, but so inaccessible to you that Spotify can make a yearly spectacle out of briefly sharing the most superficial and unverifiable analyses of it with you. And the collective knowledge that we, 600 million of us, amass through our listening, is so inaccessible to us that Spotify can passively deprive us of its insights just by not caring.

Curio, thus, my web thing for collating music curiosity, is both an experiment in making a music interface that does music things the way I personally want them done, and a meta-experiment in making a data experience that uses your data with respect for your data rights. Every Curio page has a data link at the bottom. Every bit of data Curio stores is also visible directly, on a query page where you can explore it however you like. I made a bunch of Spotify-Wrapped-like tools with which you can analyze your listening, but they do so with queries you can see, check, change or build upon, so if your goals diverge from mine, you are free to pursue them. The more paths we can follow, the more we will learn about how to reach anywhere.

There is a lot more to the human future of Data Rights than just microblogging and listening-history heatmaps, obviously. We are not yet near it, and we probably won't reach it with just our web browsers and a query language and a manifesto. Maybe no tendrils of these specific current dreams of mine will end up swirling in whatever collective dreams we eventually create by agreeing to share. I claim no certainty about the details. Certainty is not my goal. Possibility? Less resignation, more hope. I'm totally sure of almost nothing.

But I'm pretty sure we only get dreamier futures by dreaming.

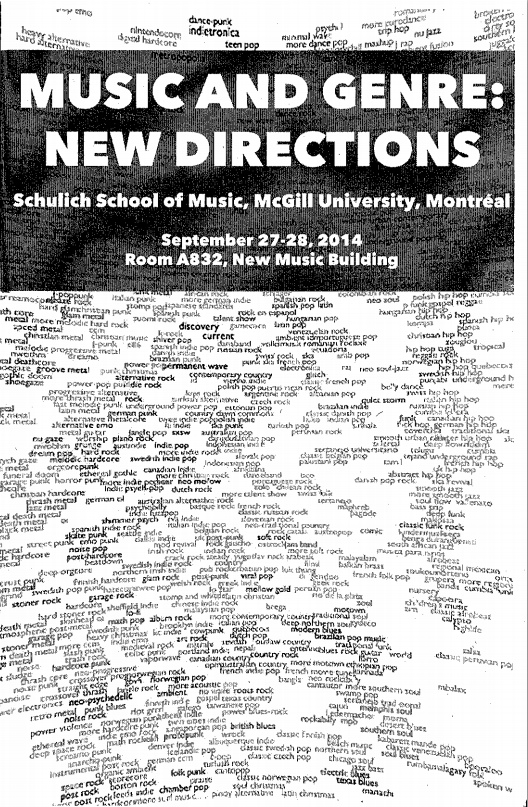

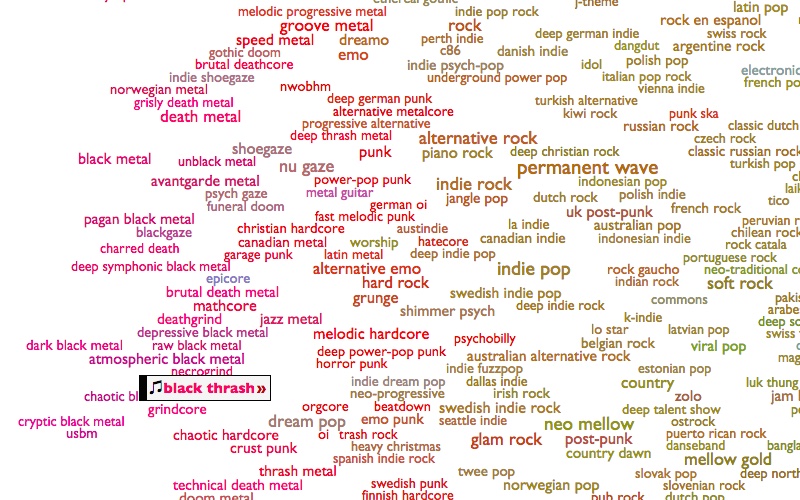

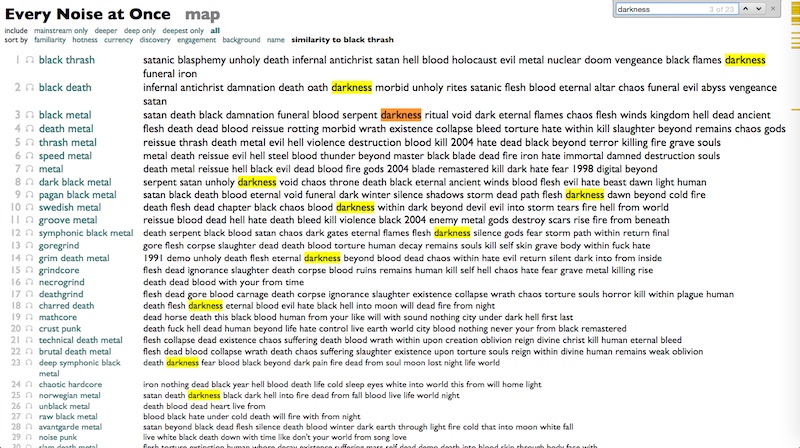

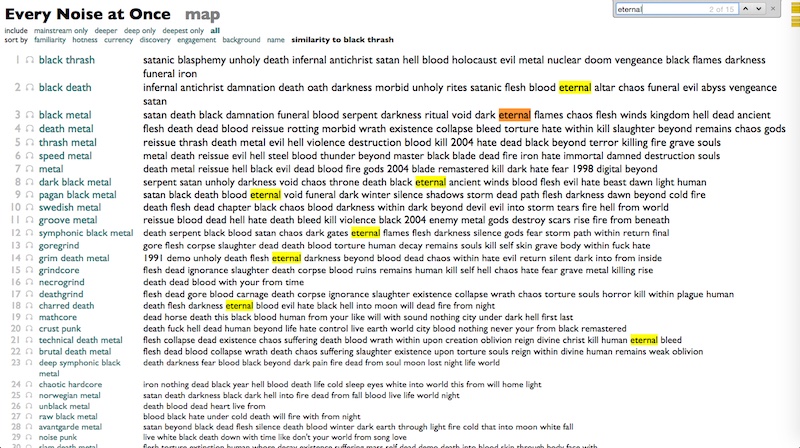

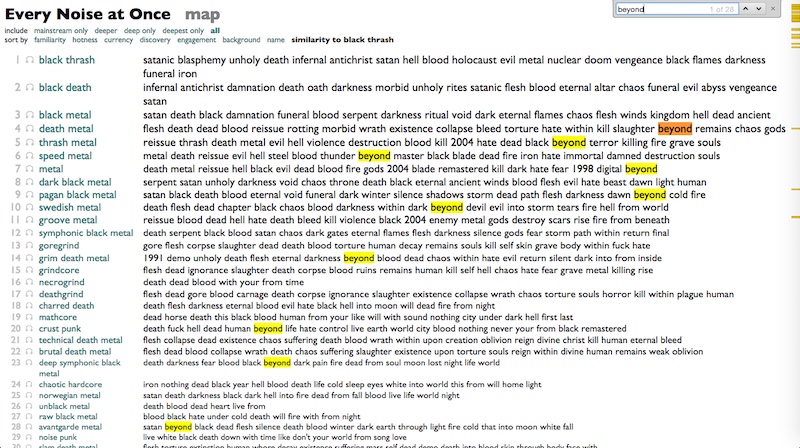

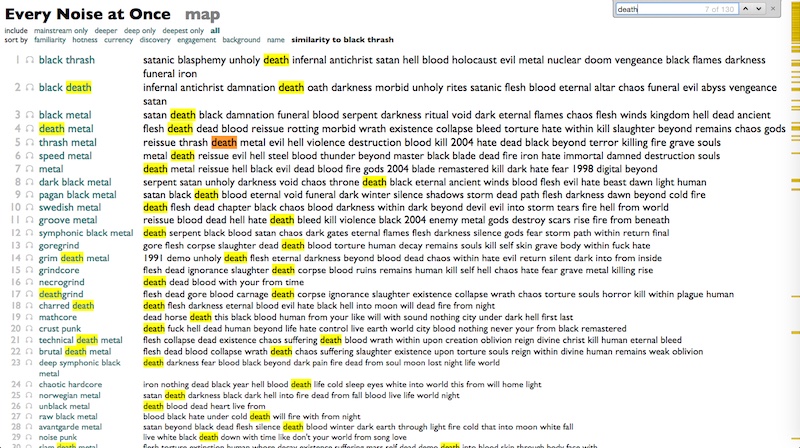

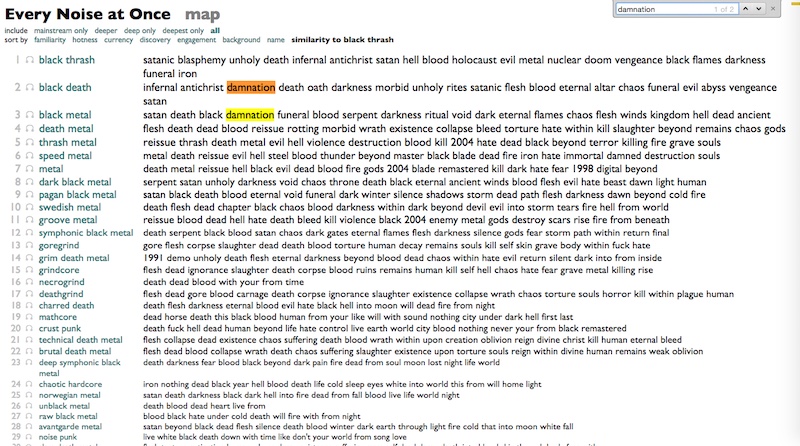

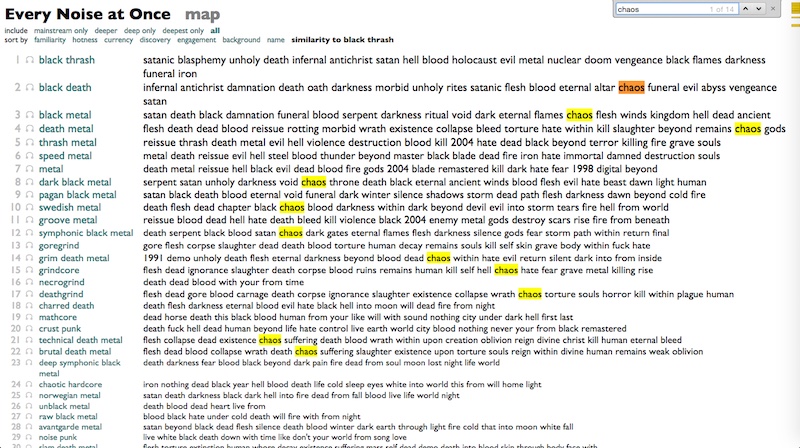

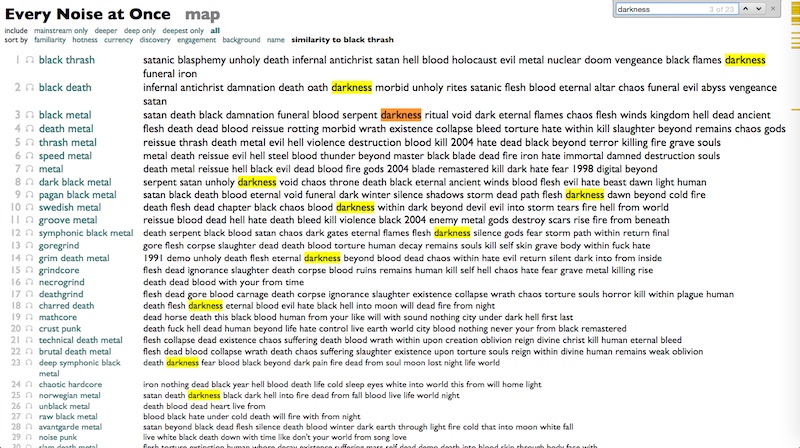

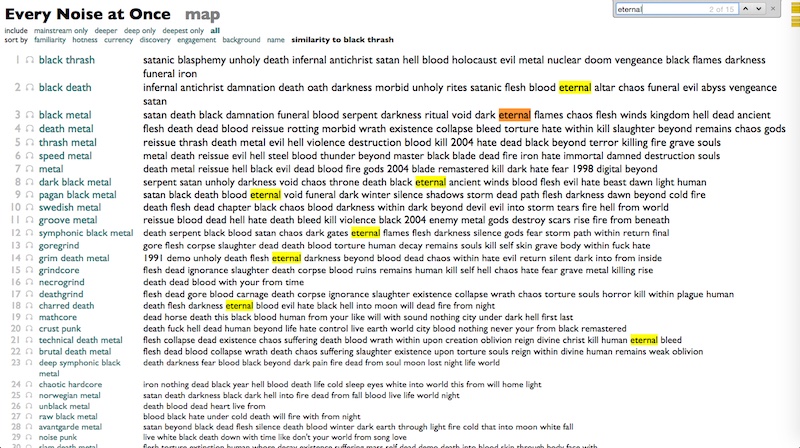

¶ Subgenres, subcontinents · 9 December 2024 essay/listen/tech

¶ A short essay about long playlists of short tracks of rain noises and streaming-music economics. · 24 September 2021 essay/tech

Rolling Stone published this recent story (https://www.rollingstone.com/pro/features/spotify-sleep-music-playlists-lady-gaga-1223911/) about the streaming success of the sleep-noise artist/label/scheme Sleep Fruits, who chop up background rain-noise recordings into :30 lengths to maximize streaming playcounts.

Sleep Fruits is undeniably and intentionally exploiting the systemic weakness of the industry-wide :30-or-more-is-a-play rule, as too are audiobook licensors who split their long content into :30 "chapters". The :30 thing is a bad rule. Most of the straightforward alternatives are also bad, so it wasn't an obviously insane initial system design-choice, but this abuse vector is logical and inevitable.

The effect of the abuse for the label doing it is simple: exploitative multiplication of their "natural" streams by a large factor. x6 if you compare it to rain noise sliced into pop-song-size lengths.
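To make the x6 concrete, here is a minimal sketch of the arithmetic. The 3:00 "pop-song-size" track length and the 8-hour night are illustrative assumptions, and `plays_counted` is a hypothetical helper, not anything from a real streaming system.

```python
MIN_PLAY_SECONDS = 30  # the ":30-or-more-is-a-play" threshold


def plays_counted(listening_seconds: int, track_seconds: int) -> int:
    """Count qualifying plays for back-to-back tracks of a single length."""
    if track_seconds < MIN_PLAY_SECONDS:
        return 0  # tracks shorter than the threshold never count as plays
    return listening_seconds // track_seconds


NIGHT_SECONDS = 8 * 60 * 60  # assumed: one 8-hour night of rain noise

pop_song_plays = plays_counted(NIGHT_SECONDS, 180)  # assumed 3:00 tracks
sliced_plays = plays_counted(NIGHT_SECONDS, 30)     # :30 slices

print(pop_song_plays, sliced_plays, sliced_plays / pop_song_plays)
```

Under these assumptions the same night of rain counts as 160 plays in 3:00 tracks and 960 plays in :30 slices, which is where the factor of 6 comes from.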

The effect on the rest of the streaming economy is more complicated. More money to Sleep Fruits does mean less money to somebody else, at least in the short term.

Under the current pro-rata royalty-allocation system used by all major subscription streaming services (one big pool, split by stream-share), the effects of Sleep Fruits' abuse are distributed across the whole subscription pool. The burden is shared by all other artists, collectively, but is fractional and negligible for any individual artist. In addition, under pro-rata if an individual listener plays Sleep Fruits overnight, every night, it doesn't change the value of their "real" music-listening activity during the day. Those artists get the same benefit from those fans as they would from a listener who sleeps in silence.

Under the oft-proposed user-centric payment system, in which each listener's payments are split according to only their plays, Sleep Fruits' short-track abuse tactic would be less effective for them. Not as much less effective as you might think, because the same two things that inflate their overall numbers (long-duration background playing + short tracks) inflate their share of each listener's plays. But less, because in the pro-rata model one listener can direct more revenue than they contributed, and in the user-centric model they can't.

In the user-centric model, though, if an individual listener listens to Sleep Fruits overnight, that directly reduces the money that goes to their daytime artists. Where pro-rata disperses the burden, user-centric would concentrate it on the kinds of artists whose fans also listen to background noise. This is probably worse in overall fairness, and it's definitely worse in terms of the listener-artist relationship, which is one of the key emotional arguments for the user-centric model.
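The difference between dispersing and concentrating the burden is easier to see with toy numbers. This is a sketch under loud assumptions: two invented listeners, a hypothetical $10 fee that is treated as going entirely to royalties, and made-up play counts. The function names are mine, and real royalty accounting is far messier.

```python
from collections import defaultdict

FEE = 10.0  # hypothetical monthly fee per listener, all treated as royalties


def pro_rata(plays_by_listener):
    """One big pool, split by each artist's share of all plays."""
    pool = FEE * len(plays_by_listener)
    totals = defaultdict(int)
    for plays in plays_by_listener.values():
        for artist, count in plays.items():
            totals[artist] += count
    all_plays = sum(totals.values())
    return {artist: pool * count / all_plays for artist, count in totals.items()}


def user_centric(plays_by_listener):
    """Each listener's fee is split only among the artists they played."""
    payouts = defaultdict(float)
    for plays in plays_by_listener.values():
        own_plays = sum(plays.values())
        if own_plays == 0:
            continue  # a listener with no plays directs no money in this sketch
        for artist, count in plays.items():
            payouts[artist] += FEE * count / own_plays
    return dict(payouts)


# Invented data: one listener sleeps to :30 rain slices, one sleeps in silence.
listeners = {
    "sleeper": {"Sleep Fruits": 960, "Daytime Band": 40},
    "silent": {"Other Band": 40},
}

for name, model in (("pro-rata", pro_rata), ("user-centric", user_centric)):
    payouts = model(listeners)
    print(name, {artist: round(amount, 2) for artist, amount in payouts.items()})
```

With these numbers, pro-rata pays Sleep Fruits about $18.46, more than the sleeper's own $10, and pays Daytime Band and Other Band the same $0.77 each for their 40 plays. User-centric caps Sleep Fruits at $9.60, but Daytime Band drops to $0.40 while Other Band, whose fan sleeps in silence, gets the full $10.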

The interesting additional economic twist to this particular case, though, is that sleeping to background noise works very badly if it's interrupted by ads. Background listening is thus a powerful incentive for paid subscriptions over ad-supported streaming. (Audiobooks similarly, since they essentially require full on-demand listening control.) So if Sleep Fruits drives background listeners to subscribe, it might be bringing in additional money that could offset or even exceed the amount extracted by its abuse. (Maybe. The counterfactual here is hard to assess quantitatively.)

And although the :30 rule is what made this example newsworthy in its exaggerated effect, in truth it's probably not really the fundamental problem. The deeper issue is just that we subjectively value music based on the attention we pay to it, but we haven't figured out a good way to translate between attention paid and money paid. Switching from play-share to time-share would eliminate the advantage of cutting up rain noise into :30 lengths, but wouldn't change the imbalance between 8 hours/night of sleep loops and 1-2 hours/day of music listening. CDs "solved" this by making you pay for your expected attention with a high fixed entry price, which isn't really any more sensible.
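A small sketch of that play-share versus time-share comparison, for one listener. It assumes 8 hours of sleep loops, 2 hours of daytime music (the top of the 1-2 hour range) and 3:00 songs; the helper names are hypothetical.

```python
SLEEP_SECONDS = 8 * 60 * 60  # 8 hours/night of sleep loops
MUSIC_SECONDS = 2 * 60 * 60  # assumed 2 hours/day of music listening
SONG_SECONDS = 180           # assumed 3:00 songs


def sleep_play_share(slice_seconds: int) -> float:
    """Fraction of this listener's plays that are sleep-noise plays."""
    sleep_plays = SLEEP_SECONDS // slice_seconds
    music_plays = MUSIC_SECONDS // SONG_SECONDS
    return sleep_plays / (sleep_plays + music_plays)


def sleep_time_share() -> float:
    """Fraction of this listener's listening time spent on sleep noise."""
    return SLEEP_SECONDS / (SLEEP_SECONDS + MUSIC_SECONDS)


print(sleep_play_share(30))   # rain sliced into :30 lengths
print(sleep_play_share(180))  # rain sliced into song-size lengths
print(sleep_time_share())     # independent of how the rain is sliced
```

Under these assumptions the sleep noise takes 96% of plays when sliced into :30 lengths, but 80% either by time or by song-size plays. Time-share removes the slicing advantage and leaves the 8-hours-versus-2 imbalance untouched.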

I don't think we're going to solve this with just math, which disappoints me personally, since I'm pretty good at solving math-solvable things with math. But in general I think time-share is a slightly closer approximation of attention-share than play-share, and thus preferable. And rather than trying to discount low-attention listening, which seems problematic and thankless and negative, it seems more practical and appealing to me to try to add incremental additional rewards to high-attention fandom. E.g. higher-cost subscription plans in which the extra money goes directly to artists of the listener's choice, in the form of microfanclubs supported by platform-provided community features. There are a lot of people who, like me, used to spend a lot more than $10/month on music, and could probably be convinced to spend more than that again if there were reasons.

Of course, not coincidentally, I have ideas about community features that can be provided with math. Lots of ideas. They come to me every :30 while I sleep.

PS: I've seen some speculation that Sleep Fruits is buying their streams. I'm involved enough in fraud-detection at Spotify to say with at least a little bit of confidence that this is probably not the case. Large-scale fraud is pretty easy to detect, and the scale of this is large. It's abusing a systemic weakness, but not obviously dishonestly.

¶ 2019 in Music · 6 January 2020 essay/listen/tech

I started making one music-list a year some time in the 80s, before I really knew enough for there to be any sense to this activity. For a while in the 90s and 00s I got more serious about it, and statistically way better-informed, but there's actually no amount of informedness that transforms a single person's opinions about music into anything that inherently matters to anybody other than people (if any) who happen to share their specific tastes, or extraordinarily patient and maybe slightly creepy friends.

Collect people together, though, and the patterns of their love are sometimes very interesting. For several years I presided computationally over an assembly of nominal expertise, trying to find ways to turn hundreds of opinions into at least plural insights. Hundreds of people is not a lot, though, and asking people to pretend their opinions matter is a dubious way to find out what they really love. I'm not really sad we stopped doing that.

Hundreds of millions of people isn't that much, yet, but it's getting there, and asking people to spend their lives loving all the innumerable things they love is a more realistic proposition than getting them to make short numbered lists on annual deadlines. Finding an individual person who shares your exact taste, in the real world, is not only laborious to the point of preventative difficulty, but maybe not even reliably possible in theory. Finding groups of people in the virtual world who collectively approximate aspects of your taste is, due to the primitive current state of data-transparency, no easier for you.

But it has been my job, for the last few years, to try to figure out algorithmic ways to turn collective love and listening patterns into music insights and experiences. I work at Spotify, so I have extremely good information about what people like in Sweden and Norway, fairly decent information about most of the rest of Europe, the Americas and parts of Asia, and at least glimmers of insight about literally almost everywhere else on Earth. I don't know that much about you, but I know a little bit about a lot of people.

So now I make a lot of lists. Here, in fact, are algorithmically-generated playlists of the songs that defined, united and distinguished the fans and love and new music in 2000+ genres and countries around the world in 2019:

2019 Around the World

You probably don't share my tastes, and this is a pretty weak unifying force for everybody who isn't me, but there are so many stronger ones. Maybe some of the ones that pull on you are represented here. Maybe some of the communities implied and channeled here have been unknowingly incomplete without you. Maybe you have not yet discovered half of the things you will eventually adore. Maybe this is how you find them.

I found a lot of things this year, myself, some of them in this process of trying to find music for other people, and some of them just by listening. You needn't care about what I like. And if for some reason you do, you can already find out what it is in unmanageable weekly detail. But I like to look back at my own years. Spotify's official forms of nostalgia so far define years purely by listening dates, but as a music geek of a particular sort, what I mean by a year is music that was both made and heard then. New music.

I no longer want to make this list by applying manual reductive retroactive impressions to what I remember of the year, but I also don't have to. Adapting my collective engines to the individual, then, here is the purely data-generated playlist of the new music to which I demonstrated the most actual listening attachment in 2019:

2019 Greatest Hits (for glenn mcdonald)

And for segmented nostalgia, because that's what kind of nostalgist I am, I also used genre metadata and a very small amount of manual tweaking to almost automatically produce three more specialized lists:

Bright Swords in the Void (Metal and metal-adjacent noises, from the floridly melodic to the stochastically apocalyptic.)

Gradient Dissent (Ambient, noise, epicore and other abstract geometries.)

Dancing With Tears (Pop, rock, hip hop and other sentimental forms.)

And finally, although surely this, if anything, will be of interest to absolutely nobody but me, I also used a combination of my own listening, broken down by genre, and the global 2019 genre lists, to produce a list of the songs I missed or intentionally avoided despite their being popular with the fans of my favorite genres.

2019 Greatest Misses (for glenn mcdonald)
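One way such a list could be computed, as a minimal sketch only: weight each genre by how much of it I played, then score the unheard songs on that genre's list by weight and rank. The scoring formula, the function name and the sample data are all assumptions for illustration, not the method actually used.

```python
from collections import Counter


def greatest_misses(my_plays, genre_lists, limit=10):
    """Rank songs I never played that are popular in the genres I play most.

    my_plays: list of (song, genre) tuples, one per play (hypothetical shape).
    genre_lists: dict of genre -> songs ordered from most to least popular.
    """
    heard = {song for song, _ in my_plays}
    genre_weight = Counter(genre for _, genre in my_plays)
    scores = Counter()
    for genre, songs in genre_lists.items():
        weight = genre_weight.get(genre, 0)
        if weight == 0:
            continue  # skip genres I never listened to
        for rank, song in enumerate(songs):
            if song not in heard:
                # Assumed scoring: my plays in the genre, discounted by list position.
                scores[song] += weight / (rank + 1)
    return [song for song, _ in scores.most_common(limit)]


# Invented data: five metal plays, one ambient play, no pop at all.
my_plays = [("a", "metal")] * 3 + [("b", "metal")] * 2 + [("c", "ambient")]
genre_lists = {"metal": ["a", "x", "b", "y"], "ambient": ["z", "c"], "pop": ["p"]}
misses = greatest_misses(my_plays, genre_lists)
```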

I made versions of this Misses list in November and December, to see what I was in danger of missing before the year actually ended, so these songs are the reverse-evolutionary survivors of two generations of augmented remedial listening. But I played it again just now, and it still sounds basically great to me. I'm pretty sure I could spend the next year listening to nothing but songs I missed in 2019 despite trying to hear them all, and it would be just as great in sonic terms. There's something hypothetically comforting in that, at least until I start trying to figure out what kind of global catastrophe I'm effectively imagining here. I'm alive, but all the musicians in the world are dead? Or there's no surviving technology for recording music, but somehow Spotify servers and the worldwide cell and wifi networks still work?

Easier to live. I now declare 2019 complete and archived. Onwards.

¶ If You Do That, the Robots Win · 16 April 2016 essay/listen/tech

[This is the script from a talk I delivered at the EMP Pop Conference today. It was written to be read aloud at an intentionally headlong pace, with somewhat-carefully timed blasts of interstitial music. I've included playable clip-links for the songs here, but the clips are usually from the middles of the songs, and I was playing the beginnings of them in the talk, so it's different. The whole playlist is here, although playing it as a standalone thing would make no sense at all.]

I used to take software jobs to be able to buy records, but buying records is now a way to hear all the world's music like collecting cars is a way to see more of the solar system.

So now I work at Spotify as a zookeeper for playlist-making robots. Recommendation robots have existed for a while now, but people have mostly used them for shopping. Go find me things I might want to buy. "You bought a snorkel, maybe you'd like to buy these other snorkels?"

But what streaming music makes possible, which online music stores did not, is actual programmed music experiences. Instead of trying to sell you more snorkels, these robots can take you out to swim around with the funny-looking fish.

And as robots begin to craft your actual listening experience, it is reasonable, and maybe even morally imperative, to ask if a playlist robot can have an authorial voice, and, if so, what it is?

The answer is: No. Robots have no taste, no agenda, no soul, no self. Moreover, there is no robot. I talk about robots because it's funny and gives you something you can picture, but that's not how anything really happens.

How everything really happens is this: people listen to songs. Different people listen to different songs, and we count which ones, and then try to use computers to do math to find patterns in these numbers. That's what my job actually involves. I go to work, I sit down at my desk (except I actually stand at my fancy Spotify standing desk, because I heard that sitting will kill you and if you die you miss a lot of new releases), and I type computer programs that count the actions of human listeners and do math and produce lists of songs.

So when anybody talks about a fight between machines and humans in music recommendation, you should know that those people do not know what the fuck they are talking about. Music recommendations are machines "versus" humans in the same way that omelets are spatulas "versus" eggs.

So the good news is that you can stop worrying that robots are trying to poison your listening. But the bad news is that you can start worrying about food safety and whether the people operating your spatulas have the faintest idea what food is supposed to taste like.

Because data makes some amazing things possible, but it also makes terrible, incoherent, counter-productive things possible. And I'm going to tell you about some of them.

Counting is the most basic kind of math, and yet even just counting things usefully, in music streaming, is harder than you probably think. For example, this is the most streamed track by the most streamed artist on Spotify:

Various Artists "Kelly Clarkson on Annie Lennox"

Do you recognize the band? They are called "Various Artists", and that is their song "Kelly Clarkson on Annie Lennox", from their album Women in Music - 2015 Stories.

But OK, that's obviously not what we meant. We just need to exclude short commentary tracks, and then this is the most streamed music track by the most streamed artist on Spotify:

Various Artists "El Preso"

Except that's "Various Artists" again. The most streamed music track by an actual artist on Spotify is:

Rihanna "Work"

OK, so that's starting to make some sense. Pretty much all exercises in programmatic music discovery begin with this: can you "discover" Rihanna?
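The three counting attempts above can be sketched as one query with two optional filters. This is a toy illustration: the field layout, the 60-second cutoff, the durations and the stream counts are all invented, and only the artist and track names come from the talk.

```python
from collections import Counter


def top_track_of_top_artist(tracks, min_seconds=0, exclude_artists=()):
    """Return (artist, title) for the most streamed track by the most streamed artist."""
    kept = [
        t for t in tracks
        if t["seconds"] >= min_seconds and t["artist"] not in exclude_artists
    ]
    artist_totals = Counter()
    for t in kept:
        artist_totals[t["artist"]] += t["streams"]
    top_artist = artist_totals.most_common(1)[0][0]
    best = max((t for t in kept if t["artist"] == top_artist), key=lambda t: t["streams"])
    return best["artist"], best["title"]


# Invented durations and stream counts, chosen only to reproduce the sequence in the talk.
tracks = [
    {"artist": "Various Artists", "title": "Kelly Clarkson on Annie Lennox", "seconds": 40, "streams": 900},
    {"artist": "Various Artists", "title": "El Preso", "seconds": 240, "streams": 800},
    {"artist": "Rihanna", "title": "Work", "seconds": 220, "streams": 700},
    {"artist": "Justin Bieber", "title": "Love Yourself", "seconds": 230, "streams": 650},
]

naive = top_track_of_top_artist(tracks)
no_commentary = top_track_of_top_artist(tracks, min_seconds=60)
actual_artist = top_track_of_top_artist(
    tracks, min_seconds=60, exclude_artists={"Various Artists"}
)
```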

Spotify just launched in Indonesia, and I happen to know that Indonesian music is awesome, because there are people there and they make music, so let's find out what the most popular Indonesian song is.

Justin Bieber "Love Yourself"

I kind of wanted to know what the most popular Indonesian song is, not just the song that is most popular in Indonesia. But if I restrict my query to artists whose country of origin is Indonesia, I get this:

Isyana Sarasvati "Kau Adalah"

Which seems like it might be the Indonesian Lisa Loeb. It's by Isyana Sarasvati, and I looked her up, and she is Indonesian! She's 23, and her Wikipedia page discusses the scholarship she got from the government of Singapore to study music at an academy there, and lists her solo recitals.

It turns out that our data about where artists are from is decent where we have it, but a lot of times we just don't. 34 of the top 100 songs in Indonesia are by artists for whom we don't have locations.
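A small sketch of why the missing locations matter: filtering a chart by artist origin silently drops every artist whose country is unknown, so it helps to count those separately. The data shape, the function name and the placeholder entries are hypothetical.

```python
def split_by_origin(chart, artist_country, country):
    """Split a popularity-ordered chart into local songs and songs of unknown origin.

    chart: list of (title, artist) in popularity order (hypothetical shape).
    artist_country: dict of artist -> country code, with unlocated artists absent.
    """
    local = [title for title, artist in chart if artist_country.get(artist) == country]
    unknown = [title for title, artist in chart if artist_country.get(artist) is None]
    return local, unknown


# Invented chart for Indonesia; the two placeholder entries stand in for unlocated artists.
chart = [
    ("Love Yourself", "Justin Bieber"),
    ("Placeholder Song A", "Unlocated Artist A"),
    ("Kau Adalah", "Isyana Sarasvati"),
    ("Placeholder Song B", "Unlocated Artist B"),
]
artist_country = {"Justin Bieber": "CA", "Isyana Sarasvati": "ID"}

local, unknown = split_by_origin(chart, artist_country, "ID")
coverage = 1 - len(unknown) / len(chart)  # share of the chart with a known origin
```

Here the top "local" song is only the top song among artists with known locations; either placeholder could be Indonesian and more popular.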